In this use case, Gergely Simon from NXP showcases a streamlined flow for developing and deploying an AI model for road surface classification. Starting with cloud-based prototyping and shared VSS data streams, the same setup is reused for physical deployment, without rework.

Using RemotiveLabs’ virtual development environment, NXP engineers can validate concepts and deploy AI models even before data streams on physical hardware are available, dramatically accelerating development cycles and reducing integration complexity.

“What’s great about this setup is that I can run inference on my AI model using a virtual data playback environment, and when it’s time to switch to physical hardware, I don’t need to start over. The setup stays the same – transitioning takes just five minutes.”

– Gergely Simon, NXP

As a first use case, NXP built a CNN-based model trained on seven VSS signals (speed, plus acceleration and angular velocity on the x, y, and z axes) sampled at 100 Hz. With the data packaged into 3-second inference windows, the model achieves over 90% accuracy in distinguishing road surface types. Before setting up a physical CAN data source, the NXP team used RemotiveCloud and sample CAN recordings to develop and iterate in a lightweight simulation loop, which sped up development and reduced dependencies early in the process.
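To make the windowing concrete: seven signals at 100 Hz over 3 seconds gives 300 samples of 7 values per inference window. The sketch below shows one way to cut a sample stream into such windows. It is not NXP's code; the function name `make_windows` and the choice of non-overlapping windows are assumptions made for illustration, since the article does not say whether windows overlap.

```python
from typing import Iterable, Iterator, List, Sequence

SAMPLE_RATE_HZ = 100   # sampling rate stated in the article
WINDOW_SECONDS = 3     # inference window length stated in the article
NUM_SIGNALS = 7        # speed + 3 acceleration axes + 3 angular velocity axes
WINDOW_LEN = SAMPLE_RATE_HZ * WINDOW_SECONDS  # 300 samples per window

Sample = Sequence[float]


def make_windows(samples: Iterable[Sample],
                 window_len: int = WINDOW_LEN) -> Iterator[List[Sample]]:
    """Yield consecutive, non-overlapping windows of `window_len` samples.

    Assumption: windows do not overlap and a trailing partial window is dropped.
    """
    window: List[Sample] = []
    for sample in samples:
        if len(sample) != NUM_SIGNALS:
            raise ValueError(f"expected {NUM_SIGNALS} values, got {len(sample)}")
        window.append(sample)
        if len(window) == window_len:
            yield window
            window = []


# Synthetic stand-in for 7.5 seconds of data (750 samples of 7 values each).
stream = [[float(i)] * NUM_SIGNALS for i in range(750)]
windows = list(make_windows(stream))
```

With 750 input samples, this produces two full windows and discards the remaining 150 samples. Each window is a 300 x 7 block, which is the shape a model trained on this setup would receive.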

This developer-focused flow was demonstrated live at COVESA AMM in Berlin in May 2025. Gergely Simon (NXP), Ola Nilsson (RemotiveLabs), and Carin Lagerstedt (RemotiveLabs) showed how cloud-based vehicle data in VSS format enables fast, relevant development work well before hardware is available.

NXP’s development flow demonstrates how to move from cloud-based prototyping to physical deployment with minimal effort, using the same data, mappings, and logic throughout.

“I use the sample recordings available in RemotiveCloud, extended by more use-case specific data, for early and cost-efficient verification of the AI model’s ability. I select the signals I want, and with 5 lines of Python code, I get the data flowing from RemotiveLabs tooling to the KUKSA data broker.”

– Gergely Simon, NXP

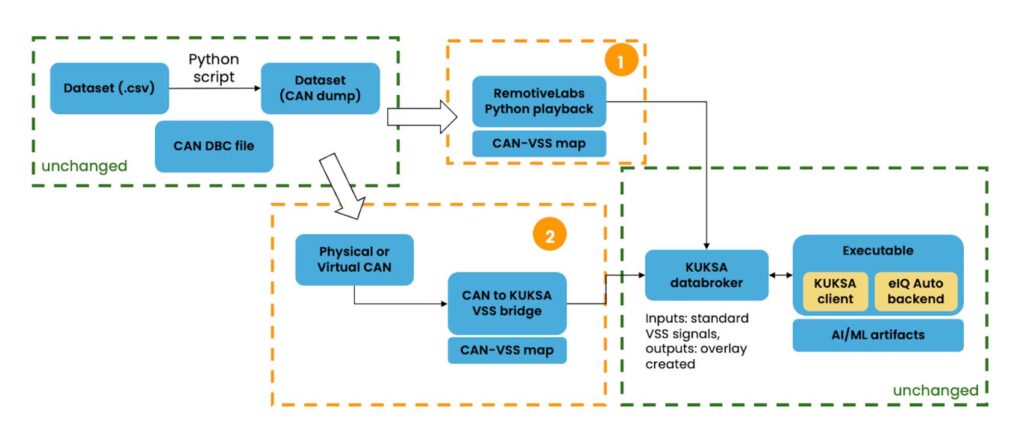

In Stage 1, recorded CAN data is streamed from RemotiveCloud and fed into the KUKSA data broker via RemotiveLabs’ Python library. This enables flexible signal parsing, visualization, and AI model development using only a single CAN-to-VSS mapping file.
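The idea behind a single CAN-to-VSS mapping can be sketched in a few lines. The example below is illustrative only: it does not use the RemotiveLabs library or the KUKSA client, and the actual mapping file format is not shown in this article. The CAN signal names (`VehicleSpeed`, `AccelX`, and so on) are hypothetical, and the VSS paths are an assumption about how the seven signals might be named in the VSS catalog.

```python
from typing import Dict, Mapping

# Hypothetical CAN signal names mapped to assumed VSS paths.
CAN_TO_VSS: Dict[str, str] = {
    "VehicleSpeed": "Vehicle.Speed",
    "AccelX": "Vehicle.Acceleration.Longitudinal",
    "AccelY": "Vehicle.Acceleration.Lateral",
    "AccelZ": "Vehicle.Acceleration.Vertical",
    "GyroX": "Vehicle.AngularVelocity.Roll",
    "GyroY": "Vehicle.AngularVelocity.Pitch",
    "GyroZ": "Vehicle.AngularVelocity.Yaw",
}


def to_vss(decoded_frame: Mapping[str, float],
           mapping: Mapping[str, str] = CAN_TO_VSS) -> Dict[str, float]:
    """Rename decoded CAN signals to VSS paths, ignoring unmapped signals."""
    return {
        mapping[name]: value
        for name, value in decoded_frame.items()
        if name in mapping
    }


# A decoded CAN frame that also carries a signal the model does not need.
frame = {"VehicleSpeed": 42.5, "AccelZ": 9.7, "WiperStatus": 1.0}
updates = to_vss(frame)
```

Because everything downstream only sees VSS paths, the component that publishes `updates` to the data broker does not need to know whether the frame came from a cloud recording or from a physical bus.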

When it’s time to move to hardware, Stage 2 reuses the same CAN dump and DBC file. A C-based bridge feeds live physical or virtual CAN data into the same KUKSA setup. This streamlined transition means teams can validate in the cloud and deploy on embedded hardware without rebuilding the pipeline, making innovation faster and integration easier.

This demo shows how NXP uses RemotiveLabs’ virtual data playback to train and validate an AI model, then seamlessly switches to physical CAN input – keeping the same setup throughout.

With this approach, NXP shows how modern automotive AI development can move faster and stay cleaner. Once the model is trained and validated, the exact same pipeline (CAN dump, DBC file, and VSS map) powers deployment on physical ECUs with no upstream rework.

This is a developer-centric blueprint for scalable, production-ready AI workflows that align with emerging needs across the industry. NXP’s development journey shows what’s possible when modern, cloud-first tools align with open standards like VSS.

Related resources: